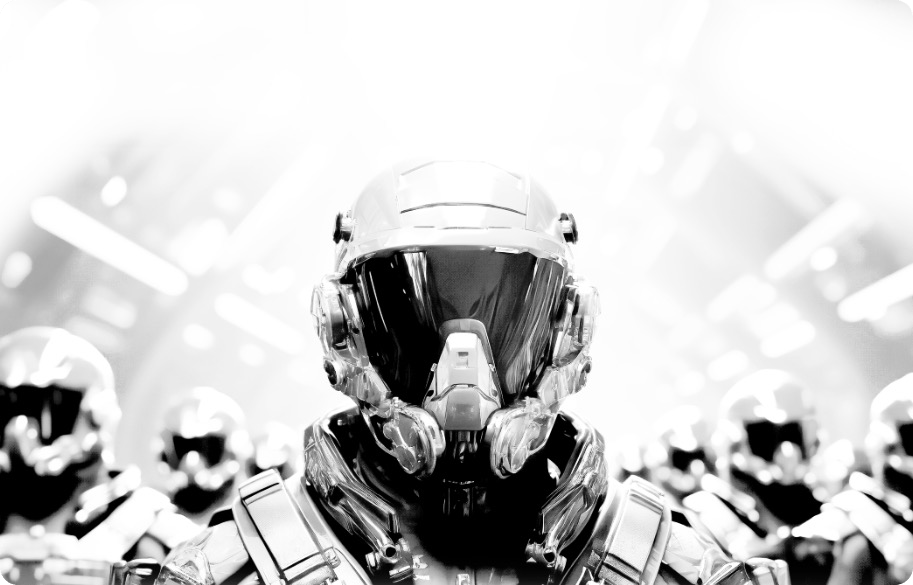

Shadow AI

The Hidden Risks of Unauthorized AI Use in the Workplace

Artificial intelligence (AI) is reshaping how we work, but not always in ways businesses can control. While organizations race to adopt enterprise-grade AI tools, a parallel trend is emerging under the radar: employees using unapproved AI platforms without IT oversight. This growing phenomenon, dubbed "Shadow AI," mirrors the earlier problem of Shadow IT, where employees installed unvetted apps or services to boost productivity. But unlike past software risks, Shadow AI introduces security, compliance, and ethical challenges that businesses can't afford to ignore.

What Is Shadow AI?

Shadow AI refers to any artificial intelligence application, whether a chatbot, content generator, or data analysis tool, that employees use without formal IT approval or governance. Tools like ChatGPT, Midjourney, and Gemini (formerly Bard) are easily accessible online, making it simple for employees to experiment with generative AI for tasks like drafting emails, writing code, or brainstorming.

The appeal is clear: AI boosts efficiency and creativity. But this unsanctioned use can have dangerous consequences.

The Hidden Risks Behind the Tools

When employees plug proprietary data into AI platforms without proper vetting, they may be unknowingly leaking sensitive information to third-party systems. Many free or consumer-grade AI platforms collect usage data to further train their models—creating a gray area around data ownership and privacy.

Beyond data leakage, Shadow AI also poses risks to compliance and accountability. For example, in industries with strict regulatory frameworks (like healthcare or finance), using unvetted AI tools can lead to violations of data protection laws such as HIPAA or GDPR. It can also muddy the waters of decision-making accountability: if an employee relies on an AI-generated response and it turns out to be wrong or biased, who’s responsible?

Moreover, AI outputs—especially from large language models—can reflect biases, generate false information, or make inappropriate suggestions. Without review protocols in place, the risk of relying on flawed outputs grows significantly.

The Case for AI Governance

To address Shadow AI, organizations need to act proactively—not punitively. Employees often turn to unauthorized tools out of a desire to work more efficiently, not malicious intent. The right strategy is not to ban all AI tools, but to establish a clear framework for their safe and effective use.

That starts with implementing an AI governance policy that includes:

- Clear guidelines on approved tools: Create a vetted list of AI applications employees can use, along with explicit rules on what data may and may not be shared with them.

- Training and education: Ensure staff understand the limitations and risks of AI tools, and how to critically assess outputs before acting on them.

- Monitoring and auditing: Use existing IT infrastructure, such as web proxies, firewalls, and endpoint management tools, to monitor AI usage patterns and detect unauthorized tools.
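As a rough illustration of the monitoring step, the sketch below scans web proxy log lines for requests to generative AI services that are not on the organization's approved list. Everything here is a hypothetical assumption for illustration: the log format (`timestamp user host`), the domain lists, and the helper names `is_listed` and `find_shadow_ai`. In practice this data would come from whatever proxy, firewall, or security tooling the organization already runs.

```python
from collections import Counter
from typing import Iterable

# Hypothetical catalog of domains associated with generative AI services.
# A real deployment would maintain this list from a curated, regularly updated feed.
KNOWN_AI_DOMAINS = {
    "chatgpt.com",
    "chat.openai.com",
    "gemini.google.com",
    "midjourney.com",
}

# Hypothetical list of AI services the organization has vetted and approved
# (chosen for illustration only).
APPROVED_AI_DOMAINS = {"gemini.google.com"}


def is_listed(host: str, domains: set[str]) -> bool:
    """Return True if host equals a listed domain or is a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in domains)


def find_shadow_ai(log_lines: Iterable[str]) -> Counter:
    """Count requests per (user, host) to AI domains that are not approved.

    Assumes each log line is whitespace-separated: 'timestamp user host'.
    """
    hits: Counter = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) < 3:
            continue  # skip empty or malformed lines
        _, user, host = parts[:3]
        if is_listed(host, KNOWN_AI_DOMAINS) and not is_listed(host, APPROVED_AI_DOMAINS):
            hits[(user, host.lower())] += 1
    return hits


# Example run against a few made-up log lines.
sample_log = [
    "2025-01-06T09:12:03Z alice chatgpt.com",
    "2025-01-06T09:15:41Z bob gemini.google.com",
    "2025-01-06T09:20:10Z alice chatgpt.com",
    "2025-01-06T09:22:55Z carol intranet.example.com",
]

for (user, host), count in find_shadow_ai(sample_log).most_common():
    print(f"{user} -> {host}: {count} request(s)")
```

With the sample data, only alice's two requests to `chatgpt.com` are flagged: bob's traffic goes to a service on the approved list, and carol's request is not to an AI service at all. Reporting per user and host, rather than blocking outright, fits the proactive rather than punitive approach described above: the output is a starting point for a conversation and for expanding the approved list.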

AI has tremendous potential to transform business operations—but only if it’s implemented securely. As we enter 2025, Shadow AI is no longer a fringe concern; it’s an active security risk. Businesses must foster a culture of innovation alongside a strong foundation of trust, security, and accountability.

By acknowledging the presence of Shadow AI and setting up clear governance, organizations can harness the power of AI while safeguarding their people, data, and reputation.

Source: Reveald Blog

Dan Singer | CEO

Seasoned executive with over 20 years of tech industry leadership, distinguishing himself through strategic roles across cybersecurity, system integration and broad-spectrum consultation.
